Top Anthropic researchers are significantly accelerated by Claude Code

"9 of 18 [researchers] reported ≥100% productivity improvements, with a median estimate of 100% and a mean estimate of 220%."

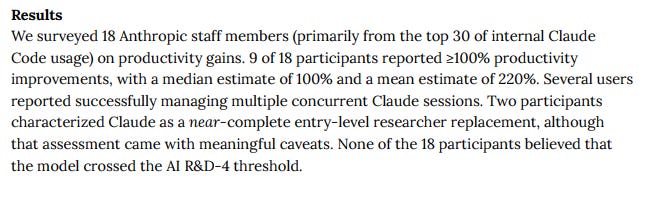

In connection with last week’s release of Claude Opus 4.5, Anthropic surveyed 18 members of its technical staff to estimate the productivity boost they get from the model.

The results (from the Opus 4.5 system card):

50% of survey participants reported productivity improvement of at least 100% (2x); median productivity improvement was 100%

Mean productivity improvement was 220%(!)

11% (2/18) characterized the model as a “near-complete entry-level researcher replacement” (with meaningful caveats)

Most researchers would prefer losing access to Opus 4.5 to losing access to Claude Code (i.e., the harness remains more important than the model)

Importantly, survey participants were not just average Anthropic employees, but rather were “primarily” selected from the top 30 Anthropic employees ranked by internal Claude Code usage. We should expect that these Claude power users would get significantly more uplift from using the model than the average Anthropic employee. Nonetheless, the productivity boost unlocked by the model for its most skilled users is extremely impressive and well worth noting.

Comparison to Sonnet 4.5

For reference, here are the results of a similar survey conducted by Anthropic in September 2025 for Sonnet 4.5:

7 Anthropic researchers were surveyed; it’s not clear whether they were average employees or some of the top users of Claude

Productivity boost estimates from Sonnet 4.5 were: 15%, 20%, 20%, 30%, 40%, 100%, one instance of “qualitative-only feedback”

0% thought that Sonnet 4.5 could completely automate the work of a junior ML researcher

As with Opus 4.5, most researchers (4 out of 7) thought that most of the productivity boost was attributable to Claude Code, as opposed to the model itself