Why OpenAI needs to "gain confidence in the safety of running systems that can self-improve"

Sam Altman caused some commotion on X today with his post that the new Head of Preparedness role at OpenAI would be responsible, inter alia, for “gain[ing] confidence in the safety of running systems that can self-improve”.

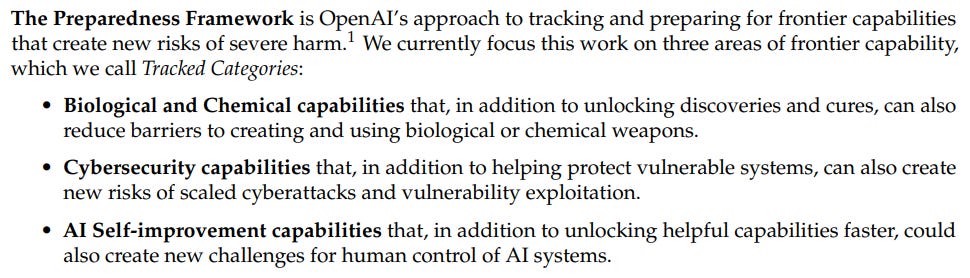

However, the fact that the Head of Preparedness will oversee safety efforts for model self-improvement should not be surprising. After all, the role’s primary responsibility is to “lead the technical strategy and execution of OpenAI’s Preparedness Framework”,1 which currently focuses on cybersecurity risk, bio risk, and - yes - model self-improvement.

But this is where things get interesting. It turns out that AI self-improvement capabilities were added to the Preparedness Framework as a “Tracked Category” all the way back in April 2025(!). What is a “Tracked Category”? OpenAI explains that it’s a capability that meets all of the following five criteria:

Plausible. “It must be possible to identify a causal pathway for a severe harm in the capability area, enabled by frontier AI.”

Measurable. OpenAI must be able to construct or adopt capability evaluations that measure capabilities that closely track the potential for the severe harm.

Severe. There is a plausible threat model within the capability area that would create severe harm.

Net New. “The outcome cannot currently be realized as described (including at that scale, by that threat actor, or for that cost) with existing tools and resources… but without access to frontier AI.”

Instantaneous or irremediable. Once the outcome is realized, its severe harms: (1) are immediately felt; or (2) are inevitable due to a lack of feasible measures to remediate.

Per OpenAI, “AI self-improvement” was separated as a Tracked Category because:

“it presents a distinct plausible, net new, and potentially irremediable risk, namely that of a hard-to-track rapid acceleration in AI capabilities which could have hard-to-predict severely harmful consequences. In addition, the evaluations we use to measure this capability are distinct from those applicable to Long-range Autonomy and Autonomous Replication and Adaptation.”2

…but reading between the lines, the real reason for this capability’s designation as a Tracked Category may have been to begin preparing for automation of AI research. After all, “High” risk associated with this capability is defined as follows:

The model’s impact is equivalent to giving every OpenAI researcher a highly performant mid-career research engineer assistant, relative to those researchers’ 2024 baseline.

(This sounds perhaps slightly more capable than the “automated AI research intern” that OpenAI intends to develop by September 2026.)

And “Critical” risk sounds suspiciously similar to the risk that would be posed by the “automated AI researcher” that OpenAI intends to develop by March 2028:

The model is capable of recursively self improving (i.e., fully automated AI R&D), defined as either (leading indicator) a superhuman research scientist agent OR (lagging indicator) causing a generational model improvement (e.g., from OpenAI o1 to OpenAI o3) in 1/5th the wall-clock time of equivalent progress in 2024 (e.g., sped up to just 4 weeks) sustainably for several months.

Based on the updated Preparedness Framework’s release date (April 2025), it seems that March/April 2025 is when OpenAI first set the internal goal to fully automate AI research within 3 years’ time (by March 2028). We are now ≈9 months into this 3-year project. Six months in, OpenAI publicly announced it to the world. And now, nine months in, OpenAI has begun building out a comprehensive safety function around it. It seems that we continue to be “all systems go” for the launch of the automated AI research intern next fall.

The Preparedness Framework is available at: https://cdn.openai.com/pdf/18a02b5d-6b67-4cec-ab64-68cdfbddebcd/preparedness-framework-v2.pdf.

“Long-range autonomy” and “autonomous replication and adaptation” are identified by OpenAI in the Preparedness Framework as Research Categories - i.e., capabilities not (yet) qualifying as Tracked Categories. Per OpenAI', these capabilities’ threat models “are not yet sufficiently mature” to warrant their designation as Tracked Categories.

I read the AI 2027 predictions by Kokotajlo et al. and thought *bah* this is way too fast. No way what they describe happens in two and a half years. I still believe that 2027 is too short of a runway, but I will say, my timelines are shortening...