The Gentle Singularity; The Fast Takeoff

On June 10, 2025, Sam Altman published a blog post entitled “The Gentle Singularity”, in which he wrote that “[w]e are past the event horizon; the takeoff has started”.

This blog post gathered some attention, and its ideas have since been mindlessly copied by others. Mark Zuckerberg claimed a few days later that “[o]ver the last few months we have begun to see glimpses of our AI systems improving themselves”.1 More recently, Elon Musk, too, said that we have entered the singularity.

It has typically been assumed that these claims have been principally driven by the generally fast rate of improvement in AI models (i.e., “AI is improving fast today; AI will improve even faster tomorrow”). With respect to Altman’s claims specifically, I am of a different view. I believe that Altman meant something very specific when he said that “we are past the event horizon”, and that this “something” is the most important thing happening in AI today.

Codex

On May 16, 2025 (a few weeks before Altman’s blog post), OpenAI released its agentic coding tool, Codex. The release flew a bit under the radar, overshadowed by the previous month’s release of o3 and endless speculation about the then-impending releases of o3-pro and OpenAI’s open-source models. But no matter. The coding agent, which was OpenAI’s answer to Claude Code, released just three months earlier, was merely the first step on OpenAI’s path to full automation of AI research.

OpenAI likely set out on this path in or around March 2025, just a few weeks after Anthropic’s release of Claude Code. This is why OpenAI’s Preparedness Framework was updated to include recursive self-improvement (RSI) as a Tracked Category in April 2025. Other circumstantial evidence also points to the project’s launch in March 2025: OpenAI’s goal of developing a fully automated AI researcher falls exactly three years later (March 2028), and its mid-way goal of developing an automated AI research “intern” falls exactly mid-way through this three-year process (September 2026, or 18 months after March 2025).

Even OpenAI insiders were not convinced by Codex at first; that changed only when a much more powerful version arrived with August’s release of GPT-5.

By September 2025, OpenAI began leaking that an automated AI researcher had become the focus of its entire research program. Here’s Jakub Pachocki explaining that OpenAI has been building most of its projects with the goal of achieving an automated AI researcher:

Our set goal for our research program has been getting to an automated researcher for a couple years now. And so we’ve been building most our projects with this goal in mind.

The following month, OpenAI officially announced to the world that it is focusing on developing the automated AI research “intern” by September 2026 and the fully automated AI researcher by March 2028. Sam Altman added that the “intern” will run on hundreds of thousands of GPUs.

Since this announcement, OpenAI has repeatedly stressed that automated AI research is now its primary focus. “We’re very excited about our 2026 roadmap and advancing work toward an automated scientist,” Mark Chen said just yesterday.

Again, the path towards fully automated AI research starts with Codex. This is clear, e.g., from this description of the “intern” from Lukasz Kaiser:

Where AI researchers have great hope to help themselves... is that if you could just say ‘hey, Codex, this is the idea, and it’s fairly clear what I’m saying, please just implement it so it runs fast on this 8-machine setup or 100-machine setup’. I think that’s what OpenAI [means by] an AI intern by the end of next year.

Claude Code

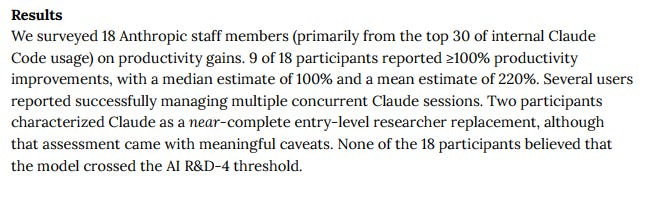

Not surprisingly, Anthropic views Claude Code in exactly the same way as OpenAI views Codex - i.e., as a coding tool that will eventually lead to automation of AI research. Indeed, the Sonnet 4.5 and Opus 4.5 system cards conspicuously included results of surveys of Anthropic employees designed to evaluate whether the model, paired with Claude Code, is good enough to fully replace a junior AI researcher. In the Opus 4.5 survey, two out of 18 participants classified Opus 4.5 as a “near-complete entry-level researcher replacement” - albeit with “meaningful caveats”.

This is also why we’ve heard Sholto Douglas speak about withholding models with capabilities to perform AI research from Anthropic’s competitors:

As AI models get better at [machine learning research tasks], I do expect the labs to hold back some of the capabilities. If a model's capable of writing out a whole new architecture that's a lot better, even if it's just capable of writing all their kernels for them, you probably don't want to release that to your competitors.

And what is “the main thing” that Jack Clark worries about these days? But of course, closing the loop on AI R&D, which would lead to RSI:

The main thing I worry about is whether people succeed at 'building AI that builds AI'—fully closing the loop on AI R&D (sometimes called recursively self-improving AI).

Clark notes that “extremely early signs” of AI getting better at doing components of AI research can already be seen, “ranging from kernel development to autonomously fine-tuning open-weight models”.

Recursive Self-Improvement and the Takeoff

But why does automating AI research matter? Turning again to Jack Clark, the key is “compounding R&D advantage” from automated AI research. The premise is that an AI researcher would be able to build an even better (and smarter) AI researcher, which, in turn, would be able to build yet another better and smarter AI researcher. Automated intelligence could quickly lead to automated superintelligence - and, eventually, to systems so much smarter than humans that a human researcher would not even be able to understand the new discoveries being made by AI, much less keep up with them:

If this stuff keeps getting better and you end up building an AI system that can build itself, then AI development would speed up very dramatically and probably become harder for people to understand.

This is “the takeoff”.

Parting Thoughts on the Race to AGI

The above considerations lead us to the most critical insight of all vis-a-vis the race to AGI. If OpenAI and/or Anthropic succeed in fully automating AI research, there is a chance that the “takeoff” will occur, with the result that no other lab will ever be able to catch up to the models built by these labs. In the “takeoff” scenario, even a large team staffed with the very best human AI researchers will never be able to compete with a model capable of superhuman AI research, and the advantages will only compound from there. A rival lab might reach automated AI research at a later date, but by then it will be too late - its model will not be able to compete with the much more advanced automated researchers operated by the leading labs, whose lead keeps compounding.
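To make the compounding argument concrete, here is a toy Python sketch. It is an illustration under loud assumptions, not a forecast: the function names, the 10% growth per step, and the six-step head start are all hypothetical, and both labs are assumed to improve at the same constant rate once they automate their research.

```python
def capability(steps_automated: int, growth_per_step: float = 0.10) -> float:
    """Toy capability level after compounding for a number of steps.

    Assumption: capability starts at 1.0 and grows by a fixed fraction
    per step once research is automated. Before automation (zero or
    negative steps) it stays at the baseline of 1.0.
    """
    return (1.0 + growth_per_step) ** max(steps_automated, 0)


def gap_over_time(head_start: int, horizon: int, growth_per_step: float = 0.10):
    """Return (t, leader, follower, gap) rows for a leader and a late follower.

    The follower automates `head_start` steps after the leader.
    """
    rows = []
    for t in range(horizon + 1):
        leader = capability(t, growth_per_step)
        follower = capability(t - head_start, growth_per_step)
        rows.append((t, leader, follower, leader - follower))
    return rows


if __name__ == "__main__":
    # Print every sixth step of a 24-step run with a 6-step head start.
    for t, leader, follower, gap in gap_over_time(head_start=6, horizon=24)[::6]:
        print(f"{t:>3} {leader:8.2f} {follower:8.2f} {gap:8.2f}")
```

In this toy model the follower's capability stays a fixed multiple behind the leader's once both are compounding, so the absolute gap widens at every step. Any catch-up would have to come from a faster growth rate or from a ceiling on the leader, neither of which the sketch models.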

Assuming that one believes in this version of the takeoff,2 one should also believe that a chasm is already developing between those labs that are racing to automate AI research and those that are not.

1. Zuckerberg was later forced to admit that this referred not to RSI, but rather to an autonomous agent built by Llama 4 that had successfully checked in some changes to the Facebook algorithm.

2. To be clear, there are plenty of reasons to doubt that the takeoff will occur in exactly this fashion. For example, the speed of automated AI research may wind up being bounded by compute or energy constraints. Alternatively, it is possible that AI models will not recursively self-improve infinitely, but instead will quickly reach some upper bound of intelligence - in which case it will not take too much time for others to catch up to their level. Finally, the goal of automating AI research may prove to be a red herring, an expensive mistake not leading to capabilities significantly better than those of a human researcher.

Interesting stuff. I have to say that based on my work and side project experience (although I don't work at a lab), I'm a convert to the O-ring theory that the speed and outcome of a process are typically limited by its weakest link. It's basically just standard operations thinking, now that I think about it.

There are reasons you can't win with unlimited human interns or ICs now, and some of those apply to AI as well. When they fail at making a correct decision, more interns or AI agents typically don't resolve that bottleneck. You need a senior person or group to figure it out. If you have a well defined reward function, you can brute force it like RL, but that's relatively uncommon in real life. You may have it for certain tasks, but you rarely have it for full jobs.

So then let's say we can fully automate 50% of an AI researcher's job. That gets you a 2X speed-up at best because you still have the remaining 50% of the job, and you probably lose some speed-up because now you end up doing additional tasks you weren't doing before (Jevons paradox). You need meaningful new capabilities to automate beyond the 50%; you can't just get there by applying what you already have. You're now on some kind of exponential improvement curve, but you don't know over what time frame. And if the inputs required for further automation are also growing exponentially, perhaps at a faster rate than your improvements, then the next automation steps will actually take longer despite your exponential productivity curve.
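The 2X ceiling is the familiar Amdahl's-law bound, and the "next steps take longer" point is a ratio of two growth rates. A minimal Python sketch of both follows. The function names, the parameters, and the geometric-growth model for inputs and productivity are assumptions made for illustration, not anything measured.

```python
import math


def overall_speedup(automated_fraction: float, task_speedup: float = math.inf) -> float:
    """Amdahl's-law speed-up when only part of a job is accelerated.

    automated_fraction: share of the job that is automated (0 to 1).
    task_speedup: how much faster the automated share runs; infinity
    means that share takes no time at all ("fully automated").
    """
    if not 0.0 <= automated_fraction <= 1.0:
        raise ValueError("automated_fraction must be between 0 and 1")
    if task_speedup <= 0:
        raise ValueError("task_speedup must be positive")
    remaining = 1.0 - automated_fraction             # still done at the old speed
    accelerated = automated_fraction / task_speedup  # x / inf == 0.0
    total = remaining + accelerated
    return math.inf if total == 0.0 else 1.0 / total


def step_durations(input_growth: float, productivity_growth: float, steps: int) -> list[float]:
    """Relative time per automation step under a toy geometric model.

    Assumption: step k needs input_growth**k units of work and is done
    at productivity_growth**k units per unit time, so its duration is
    the ratio of the two raised to the power k.
    """
    return [(input_growth / productivity_growth) ** k for k in range(steps)]


if __name__ == "__main__":
    print(overall_speedup(0.5))             # half the job fully automated
    print(overall_speedup(0.5, 10.0))       # half the job made 10x faster
    print(step_durations(3.0, 2.0, 5))      # inputs grow faster than productivity
    print(step_durations(2.0, 3.0, 5))      # productivity grows faster than inputs
```

When `input_growth` exceeds `productivity_growth`, each successive step takes longer even though productivity is itself growing exponentially, which is the scenario described above.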

So I think we're on the curve, but progress may not get subjectively faster.

Loved the clear writing.